Use custom LLM providers in Claude Code

November 24, 2025

Claude Code has been one of the most popular coding agents this year.

I’ve relied on it heavily for common development tasks.

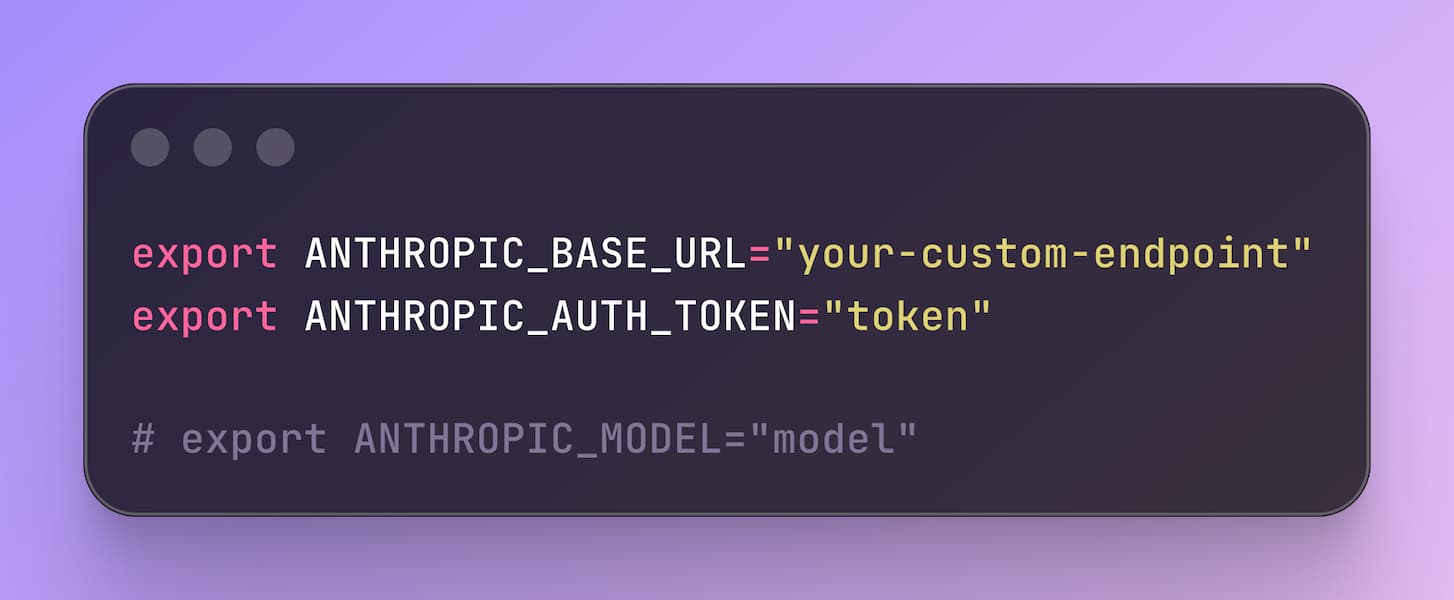

One useful but lesser-known feature is that Claude Code allows you to override the default Anthropic API endpoint using the ANTHROPIC_BASE_URL environment variable.

This is useful in many scenarios, such as working around quota limits or connecting to custom model endpoints and third-party providers like GitHub Copilot.
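As a minimal sketch of the override, the variables can be scoped to a single command instead of being exported for the whole session. The helper name `with_anthropic_endpoint` is hypothetical; `ANTHROPIC_BASE_URL` and `ANTHROPIC_AUTH_TOKEN` are the variables used later in this post.

```shell
# Run one command with the Anthropic endpoint overridden.
# The body runs in a subshell, so the exports never reach the calling shell.
# Usage: with_anthropic_endpoint <base-url> <token> <command> [args...]
with_anthropic_endpoint() (
  export ANTHROPIC_BASE_URL="$1"
  export ANTHROPIC_AUTH_TOKEN="$2"
  shift 2
  "$@"
)
```

For example, `with_anthropic_endpoint "https://api.moonshot.ai/anthropic" "$MOONSHOT_API_KEY" claude` would start Claude Code against that endpoint, assuming the key is stored in a variable called `MOONSHOT_API_KEY` (an illustrative name).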

Since Claude Code only speaks the Anthropic Messages API, we need either a provider that already supports this format or a proxy that translates between the Anthropic format and the provider's own API.
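To get a feel for the format, here is a rough sketch of a minimal Messages API request. The `/v1/messages` path and the `anthropic-version` and `x-api-key` headers come from Anthropic's public API. The function names are hypothetical, and whether a given third-party endpoint expects `x-api-key` or an `Authorization: Bearer` header is an assumption to check against that provider's instructions.

```shell
# Build a minimal Messages API request body.
# Naive on purpose: <model> and <prompt> must not contain quotes or
# backslashes, because no JSON escaping is done here.
# Usage: messages_payload <model> <prompt>
messages_payload() {
  printf '{"model":"%s","max_tokens":64,"messages":[{"role":"user","content":"%s"}]}\n' "$1" "$2"
}

# Send the payload to <base-url>/v1/messages to see whether the endpoint
# answers in the Anthropic format. Defined only, never called automatically.
# Usage: probe_messages_api <base-url> <token> <model>
probe_messages_api() {
  curl -sS "$1/v1/messages" \
    -H "content-type: application/json" \
    -H "anthropic-version: 2023-06-01" \
    -H "x-api-key: $2" \
    -d "$(messages_payload "$3" "Say hello")"
}
```

If the endpoint replies with a JSON object containing a `content` array, it is likely usable as an `ANTHROPIC_BASE_URL` target.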

These environment variables are documented. Many model providers, such as Kimi, DeepSeek, Qwen, and Z.ai, provide instructions for setting them up with Claude Code. LLM gateways like OpenRouter also support Anthropic's API out of the box.

GitHub Copilot can also be used as the backend for Claude Code, which is especially valuable in enterprise environments where Anthropic API access may be restricted. We can proxy between Claude Code and the GitHub Copilot API with the copilot-api tool:

```shell
npx copilot-api@latest auth
npx copilot-api@latest start --claude-code
```

What's more, I like to wrap these settings in an alias in my shell configuration.

For example, in Fish Shell:

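```fish
function kimi
    # Read the API key from 1Password at launch time
    set -x ANTHROPIC_AUTH_TOKEN (op read "op://Private/Moonshot AI/credential")
    set -x ANTHROPIC_BASE_URL https://api.moonshot.ai/anthropic
    # Forward all arguments to claude, not just the first one
    claude $argv
end
```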

Or define a cc alias to launch Claude Code with a custom provider easily:

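```bash
# Note: in this shell, `cc` shadows the system C compiler command of the same name.
cc() {
  # Point Claude Code at the local proxy
  export ANTHROPIC_BASE_URL="http://localhost:4141"
  # Placeholder token for the local proxy
  export ANTHROPIC_AUTH_TOKEN="dummy"
  # Main model and the small, fast model for background tasks
  export ANTHROPIC_MODEL="claude-sonnet-4.5"
  export ANTHROPIC_SMALL_FAST_MODEL="gpt-4o-mini"
  claude "$@"
}
```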
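With several providers in play, one option is a single launcher that picks the settings by name. The sketch below uses only the two endpoints from this post. The function name `claude_via` and the `MOONSHOT_API_KEY` variable are hypothetical, and model selection is left out for brevity.

```shell
# Launch Claude Code against a named provider.
# The whole body runs in a subshell, so nothing leaks into the session.
# Usage: claude_via <kimi|copilot> [claude args...]
claude_via() (
  provider="$1"
  shift
  case "$provider" in
    kimi)
      base_url="https://api.moonshot.ai/anthropic"
      token="${MOONSHOT_API_KEY:-}"   # hypothetical variable holding the key
      ;;
    copilot)
      base_url="http://localhost:4141"
      token="dummy"
      ;;
    *)
      echo "unknown provider: $provider" >&2
      exit 2
      ;;
  esac
  export ANTHROPIC_BASE_URL="$base_url"
  export ANTHROPIC_AUTH_TOKEN="$token"
  claude "$@"
)
```

Calling `claude_via copilot` then behaves much like the alias above, while `claude_via kimi` switches to Moonshot without editing the shell configuration.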